I am the lead programmer on Seattle Academy’s MATE underwater robotics team. Over the last six months, I have been building a web dashboard that we use to control and debug our robot.

The code is on GitHub here: https://github.com/redshiftrobotics/blueshift2020

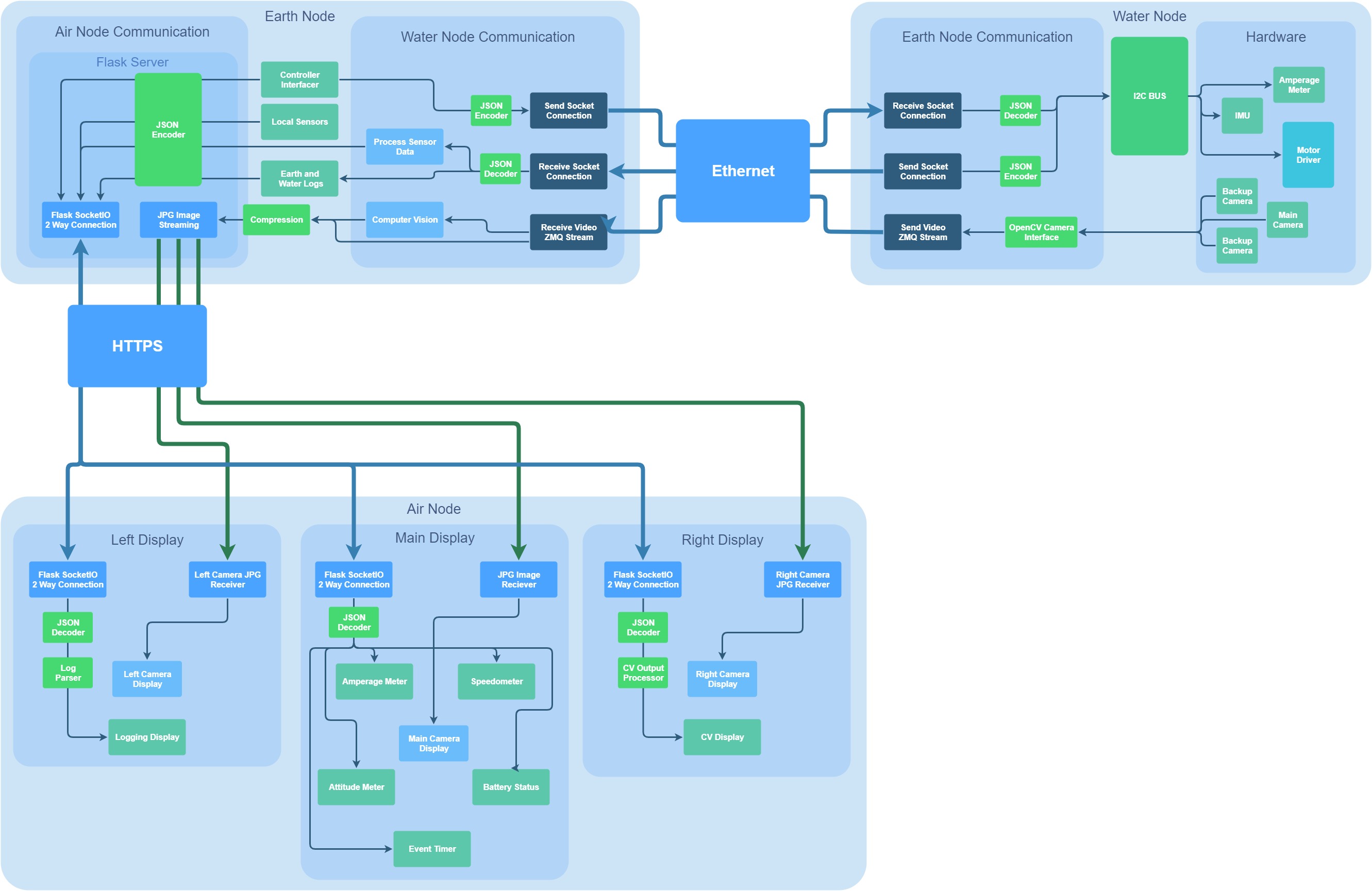

This code is split up into 3 sections called nodes. The three nodes are water, air, and earth. They are split across two computers. The air and earth nodes are on the computer above the water in the control station. The water node runs on the computer under the water that is attached to the robot. Each node has a different job in helping the robot function. The earth node is the hub that everything goes through. It communicates with both the water and air nodes, ensuring that data goes to the right place. As it is the most computationally powerful, it also runs all of the computer vision, handles controller input, and is in charge of logging. The water

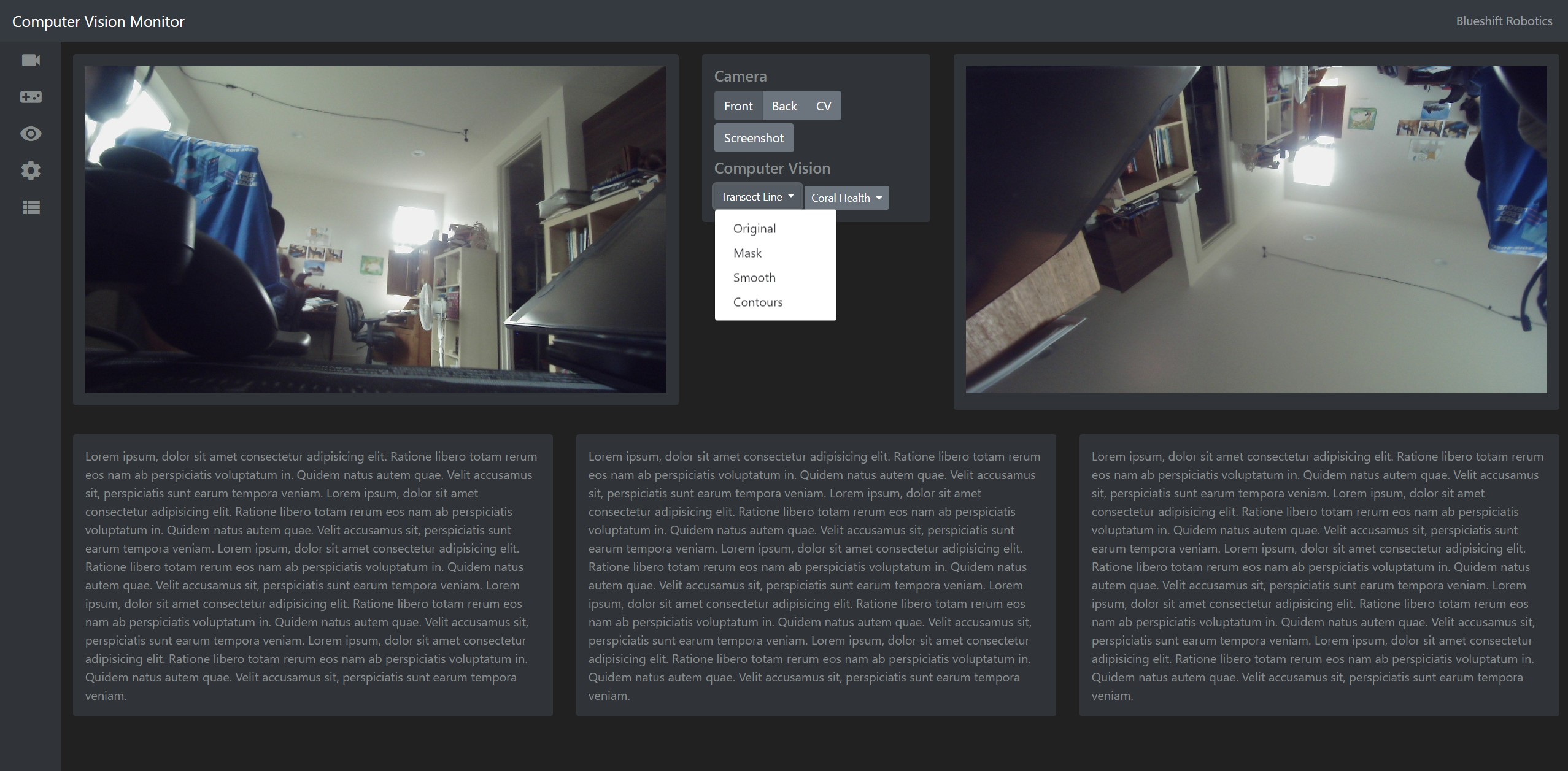

node interfaces with all of the hardware on the robot itself. That includes directly controlling the motors, reading data from sensors, and streaming camera data. Some of the sensor data needs to be pre-processed which it handles too. It sends all of its processed data to the earth node. The air node is a front end to display the information captured and processed by the water and earth nodes. It displays sensor measurements, camera feeds, logs, and computer vision results.
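To make the hub role of the earth node more concrete, here is a minimal Python sketch of topic-based routing. It is only an illustration: the `EarthHub` class, the topic names, and the message shape are assumptions of mine for this example and are not taken from the repository.

```python
from collections import defaultdict
from typing import Callable, Dict, List

# Hypothetical message shape, e.g.
# {"source": "water", "topic": "imu", "data": {"yaw": 90.0}}
Message = Dict[str, object]
Handler = Callable[[Message], None]


class EarthHub:
    """Toy stand-in for the earth node: logs and routes messages by topic."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)
        self.log: List[Message] = []  # the hub keeps a log of everything it sees

    def subscribe(self, topic: str, handler: Handler) -> None:
        """Register a handler (for example, an air-node panel) for a topic."""
        self._subscribers[topic].append(handler)

    def publish(self, message: Message) -> int:
        """Log the message, forward it to every subscriber of its topic,
        and return how many handlers received it."""
        self.log.append(message)
        handlers = self._subscribers.get(str(message["topic"]), [])
        for handler in handlers:
            handler(message)
        return len(handlers)


# Example: the water node publishes an IMU reading and an air panel receives it.
hub = EarthHub()
air_imu_panel: List[Message] = []
hub.subscribe("imu", air_imu_panel.append)
delivered = hub.publish({"source": "water", "topic": "imu", "data": {"yaw": 90.0}})
```

In this sketch, the water and air nodes never talk to each other directly, so logging and routing live in one place.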

This is a detailed diagram showing the structure of the program and the flow of data.

The air node is split into three windows, each on a separate monitor. The left display shows a real-time video stream from the robot:

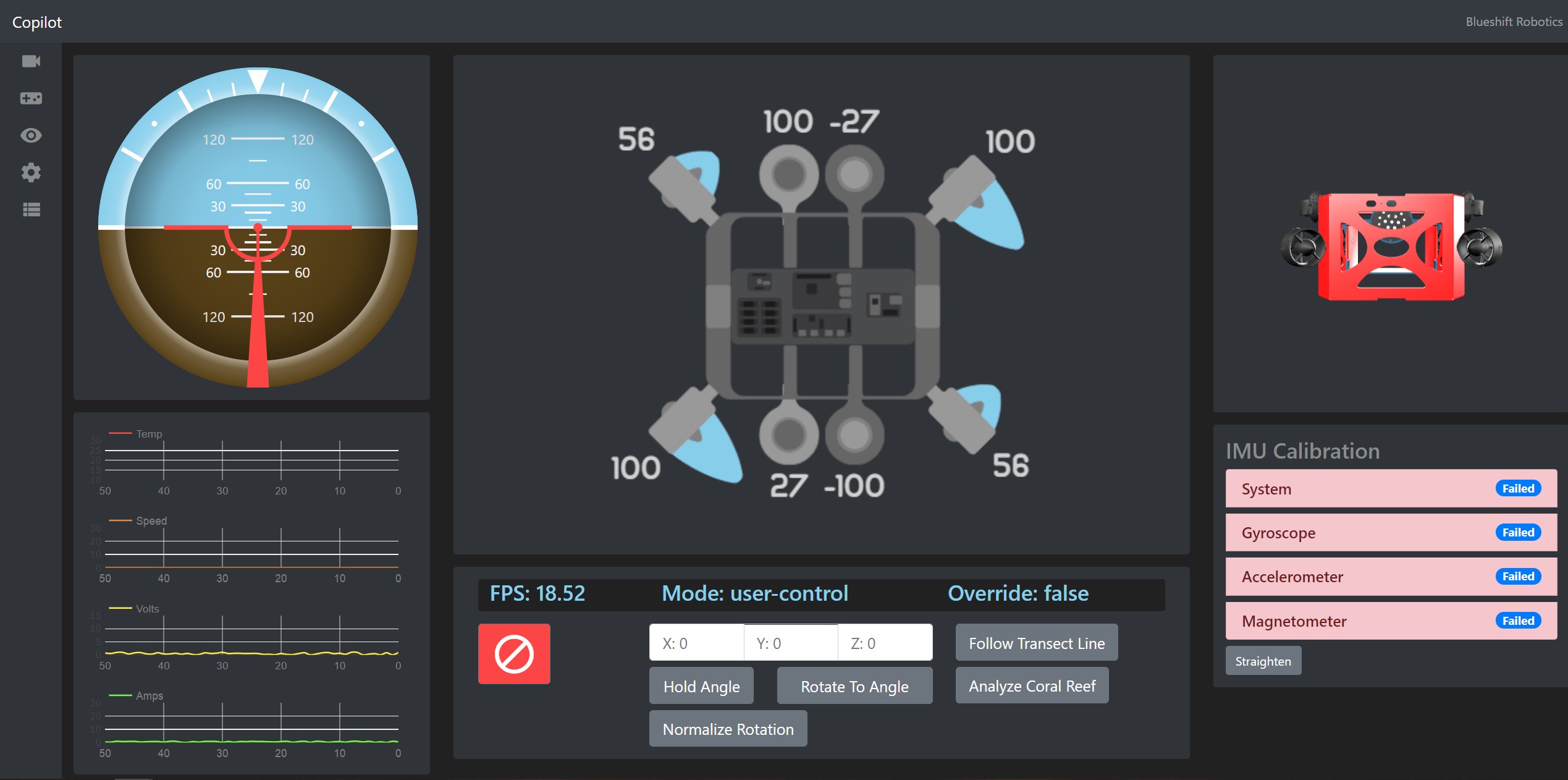

The middle monitor displays sensor outputs, shows the current state of the robot, and helps the driver control it:

The panel on the top right is a full 3D model of our robot that orients itself based on data from an onboard IMU.

3D model updating based on IMU data
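As a rough sketch of how a model can be oriented from IMU data, the Python below builds a rotation matrix from roll, pitch, and yaw and applies it to a vertex. It assumes the IMU reports Euler angles in degrees and uses the common Z-Y-X (yaw, then pitch, then roll) convention. Both are assumptions for illustration, the function names are hypothetical, and the dashboard may well use quaternions or a 3D library instead.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def rotation_matrix(roll_deg: float, pitch_deg: float, yaw_deg: float) -> Matrix:
    """Return R = Rz(yaw) * Ry(pitch) * Rx(roll) for angles given in degrees."""
    r, p, y = (math.radians(a) for a in (roll_deg, pitch_deg, yaw_deg))
    cr, sr = math.cos(r), math.sin(r)
    cp, sp = math.cos(p), math.sin(p)
    cy, sy = math.cos(y), math.sin(y)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def rotate(matrix: Matrix, vertex: Sequence[float]) -> List[float]:
    """Apply a 3x3 rotation matrix to a single (x, y, z) vertex."""
    return [sum(row[i] * vertex[i] for i in range(3)) for row in matrix]


# Example: a 90 degree yaw turns the model's x axis onto the y axis.
nose = rotate(rotation_matrix(0.0, 0.0, 90.0), (1.0, 0.0, 0.0))
```

Applying the same matrix to every vertex of the model (or setting it as the model's transform) on each new IMU reading keeps the on-screen robot aligned with the real one.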

The right display is meant to be used by a programmer (namely me) to run and debug computer vision algorithms:

The bottom three panels will contain tools for comparing coral reefs. I am currently implementing them.

Algorithm for line detection and following
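For readers unfamiliar with line following, here is a minimal Python sketch of one common approach: take a binary mask in which line pixels are 1, find the horizontal centroid of those pixels, and steer toward it. This is not necessarily the algorithm used on our robot. The function names, the mask format, and the `gain` parameter are all assumptions made for this example.

```python
from typing import Optional, Sequence


def line_offset(mask: Sequence[Sequence[int]]) -> Optional[float]:
    """Return the line's horizontal offset from the image center in [-1, 1],
    or None if the mask is empty or contains no line pixels."""
    if not mask or not mask[0]:
        return None
    width = len(mask[0])
    total_x = 0
    count = 0
    for row in mask:
        for x, pixel in enumerate(row):
            if pixel:
                total_x += x
                count += 1
    if count == 0:
        return None
    center = (width - 1) / 2
    if center == 0:
        return 0.0  # a one-pixel-wide image has no left or right
    return (total_x / count - center) / center


def steering_command(offset: Optional[float], gain: float = 0.5) -> float:
    """Proportional steering: positive steers right, negative steers left.
    Returns 0.0 when no line is visible."""
    if offset is None:
        return 0.0
    return max(-1.0, min(1.0, gain * offset))


# Example: a vertical line along the right edge of a 5-pixel-wide mask.
mask = [
    [0, 0, 0, 0, 1],
    [0, 0, 0, 0, 1],
    [0, 0, 0, 0, 1],
]
command = steering_command(line_offset(mask))
```

In practice, the mask would come from a thresholding or color-filtering step on the camera frame, which is left out here.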